By Vikram Umachagi | ECD Technical Marketing and Performance Engineer, Broadcom Inc

Technology Overview

Oracle TimesTen In-Memory Database

In-memory databases have been around for a long time. They manage data in random access memory (RAM) instead of the non-volatile storage that traditional relational database management systems (RDBMS) rely on. Because an in-memory database runs entirely from system main memory, transactions complete faster, with dramatic gains in responsiveness and throughput. Unfortunately, this speed comes with the added cost of keeping the data in system memory, which is also limited in capacity.

As data volumes constantly increase, so does the cost of in-memory databases. Their use is therefore typically limited to specific applications that demand real-time, high-volume online transaction processing (OLTP) in industries such as telecom, financial services, and location-based services.

Gen 7 Fibre Channel

Improved performance in any application requires changing the underlying hardware, which can also deliver higher capacity at scale. For faster compute, the improvements are delivered by the latest CPUs and memory technologies. For faster storage, new flash-based storage arrays have significantly improved access times. For faster applications, a faster interconnect is also required. This blog discusses one example of a modern workload accelerated by a faster interconnect technology: Gen 7 Fibre Channel (FC), a Storage Area Network (SAN) protocol. Fibre Channel is the most trusted high-speed networking technology used in data centers today. The latest, seventh generation of Fibre Channel doubles the throughput from 32G (in Gen 6 Fibre Channel) to 64G.

Business Challenge

In-memory databases need persistent storage for the backups, logs, snapshots, redo operations, and other data required to recover from power outages and server downtime. This means that one copy of the data, in some format, has to be kept in persistent storage. For enterprise data centers, that persistent storage is usually a Fibre Channel SAN.

The data held in persistent storage on a Fibre Channel SAN has to be loaded into main memory (RAM) at database startup after planned or unplanned server downtime. As a database grows, restoring it into main memory can take hours or even days before the database can start serving users.

Solution Overview

Benefits of Gen 7 Fibre Channel

Startup of an in-memory database requires moving all the database data residing in SAN storage to the host memory. The sooner that's done, the sooner the application is back online. The time needed to load the database into RAM depends on system capacity and database size. When using a Gen 7 FC Host Bus Adapter (HBA), you can double the throughput over a Gen 6 FC HBA.
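To make the relationship between database size, link speed, and load time concrete, here is a minimal back-of-the-envelope sketch. It assumes the commonly quoted nominal per-direction throughput of roughly 3,200 MB/s for 32G and 6,400 MB/s for 64G Fibre Channel, a single link, and no storage, file system, or database overhead. The function name and the table of rates are illustrative and are not taken from the test setup, so the result is an idealized lower bound rather than a prediction.

```python
# Assumed nominal per-direction throughput in MB/s (round figures, not measurements).
NOMINAL_MB_PER_S = {"32G": 3200, "64G": 6400}


def ideal_load_seconds(db_size_gb: float, link: str) -> float:
    """Best-case seconds to move db_size_gb (decimal GB) over a single FC link."""
    size_mb = db_size_gb * 1000  # decimal units: 1 GB = 1000 MB
    return size_mb / NOMINAL_MB_PER_S[link]


if __name__ == "__main__":
    for link in ("32G", "64G"):
        seconds = ideal_load_seconds(100, link)
        print(f"{link}: about {seconds:.1f} s to move 100 GB at line rate")
```

Real load times will be longer, since the array, the host, and the database itself all add overhead. The point of the sketch is only that, at a fixed database size, the ideal transfer time scales inversely with link throughput.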

We were curious to see if that advantage would hold up in the real world amid variations in system design and components. Hence, we put the newer HBA to the test and benchmarked 64G and 32G performance for this blog.

Solution Architecture

Performance

A sample workload was created using an x86 Intel Eagle Stream server connected to an all-flash array (Figure 1). The SAN array provided the permanent storage used by the Oracle TimesTen Database (TimesTen), which was installed on the server. The database was filled using the sample data generator application provided on the TimesTen developer website.

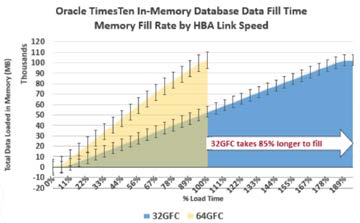

This data was stored in the main memory of the server for faster access, while the redo logs and checkpoint data were written to the file system mapped from the Fibre Channel SAN array. After the server restart, the database services started reading the data from permanent storage into main memory through the FC SAN. TimesTen allows checkpoints to be read in parallel to load the data faster. Because the data was read in parallel from multiple checkpoint files, the transfer was limited by the throughput of the FC technology in use: 32G for Gen 6 FC and 64G for Gen 7 FC.
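The effect of parallel reads can be illustrated with a generic sketch. This is not how TimesTen loads checkpoints internally; it is a hypothetical helper that reads several files concurrently with Python's standard thread pool and reports the aggregate bytes and elapsed time. The function names, chunk size, and worker count are all assumptions chosen for illustration.

```python
from concurrent.futures import ThreadPoolExecutor
from pathlib import Path
import time


def read_file(path: Path, chunk_size: int = 8 * 1024 * 1024) -> int:
    """Read one file sequentially in chunks and return the number of bytes read."""
    total = 0
    with path.open("rb") as f:
        while chunk := f.read(chunk_size):
            total += len(chunk)
    return total


def parallel_read(paths: list[Path], workers: int = 4) -> tuple[int, float]:
    """Read all files concurrently; return (total_bytes, elapsed_seconds)."""
    start = time.perf_counter()
    with ThreadPoolExecutor(max_workers=workers) as pool:
        total = sum(pool.map(read_file, paths))
    elapsed = time.perf_counter() - start
    return total, elapsed
```

Dividing the returned byte count by the elapsed time gives an aggregate read rate. With enough concurrent readers and fast enough storage, that rate tends toward whatever the slowest shared component allows, which in the test described above was the FC link.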

Even for a small database with around 100 GB of data in checkpoint files, we saw that the data transfer took 85% longer with 32G than with 64G (Figure 2). In a large data center deployment, we would expect to see an even greater improvement in data transfer time with 64G than in the small test setup used here.
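The "85% longer" figure can be restated in two other common ways: as a speedup factor and as the fraction of time saved. The short sketch below shows the arithmetic; the function names are illustrative, and the only input taken from the test is the 85% figure.

```python
def speedup_from_percent_longer(percent_longer: float) -> float:
    """If the slow run takes percent_longer % more time, return slow_time / fast_time."""
    return 1 + percent_longer / 100


def time_saved_fraction(percent_longer: float) -> float:
    """Fraction of the slow run's time that the fast run saves."""
    return 1 - 1 / speedup_from_percent_longer(percent_longer)


if __name__ == "__main__":
    print(f"speedup: {speedup_from_percent_longer(85):.2f}x")
    print(f"time saved: {time_saved_fraction(85):.1%}")
```

In other words, a transfer that takes 85% longer on 32G corresponds to a 1.85x speedup on 64G, or roughly 46% less transfer time, which is somewhat short of the ideal 2x that the link rates alone would suggest.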

Conclusion

In the dynamic world of software applications, startup times play a crucial role in user experience. The symbiosis between software applications and hardware leads to optimal performance. This need for instant engagement drives the convergence of advanced technologies. As demonstrated in testing, the Gen 7 FC HBA supercharged the startup of the TimesTen database and provided an applause-worthy performance.