Rupin Mohan, Director R&D, Hewlett Packard Enterprise

Introduction

Storage served as the core example of disruptive technology in the work of Prof. Clayton Christensen of Harvard Business School. Prof. Christensen practically coined the term “disruptive technologies” with the 1995 Harvard Business Review article “Disruptive Technologies: Catching the Wave” (co-authored with Joseph Bower), which used the disk drive industry as its prime example. The key metrics for the storage industry in those two decades were 1) disk size and 2) dollars per megabyte (MB). Each time the disk form factor shrank, on the way from 14 inches down to 3.5 inches, the leading established disk manufacturers were disrupted by smaller, more nimble startups.

Fast forward to 2016, and the key storage performance metrics have shifted to:

- I/O operations per second (IOPS)

- Bandwidth in MB/s

- I/O operation latency

IOPS – I/O operations per second

– A measure of the number of I/O operations (reads and writes) per second issued by the application servers.

Bandwidth

– A measure of the data transfer rate, or I/O throughput, in bytes per second or megabytes per second (MB/s).

Latency

– A measure of the time taken to complete an I/O request, also known as response time. It is frequently measured in milliseconds (thousandths of a second) or, for extremely high-performance technology, in microseconds (millionths of a second). Latency is introduced at many points in the SAN: the server operating system and host bus adapter (HBA), the SAN switches, and the storage target(s) and media. In general, it is undesirable in any system.
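Because each I/O moves a block of data, IOPS and bandwidth are linked: bandwidth is roughly IOPS multiplied by the I/O size. The short Python sketch below uses made-up workload numbers (they are not measurements of any product) to show why small random I/O is usually judged by IOPS and large sequential I/O by bandwidth.

```python
def bandwidth_mb_per_s(iops: float, io_size_bytes: int) -> float:
    """Throughput in MB/s (10**6 bytes per second) for a given IOPS rate and I/O size."""
    return iops * io_size_bytes / 1_000_000


# Hypothetical workloads: illustrative numbers, not measurements.
small_random = bandwidth_mb_per_s(iops=100_000, io_size_bytes=4_096)      # 4 KiB I/Os
large_sequential = bandwidth_mb_per_s(iops=5_000, io_size_bytes=262_144)  # 256 KiB I/Os

print(f"4 KiB random:       {small_random:.1f} MB/s")      # 409.6 MB/s
print(f"256 KiB sequential: {large_sequential:.1f} MB/s")  # 1310.7 MB/s
```

The second workload completes 20 times fewer I/Os yet moves more than three times as much data per second, which is why both metrics are quoted.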

While the physical size of disks has stabilized, the dollars-per-MB metric has given way to dollars per gigabyte (GB) as storage prices continue to fall substantially. That metric is, of course, still very important, but there is a clear expectation that prices will keep dropping as volumes for new drives increase.

Much as the electric car is reinventing the automobile industry, transformations in the storage industry are driving profound advances in latency, IOPS, and bandwidth.

Take, for example, the growing popularity of flash storage and solid state drives (SSDs) in the enterprise storage market. Flash has clearly disrupted the spinning-media (classic disk drive) industry. This time, the disruption came not from a smaller disk but from a complete shift in storage technology. Instead of recording data magnetically on spinning platters, flash drives store data on non-volatile memory semiconductor chips. The big practical difference is speed: SSD access is extremely fast because there is no media spinning at thousands of RPM and no heads that must move into position.

Historically, the spinning disk drive was the lowest common denominator in storage performance. Storage experts could quickly estimate the maximum performance of a virtualized storage array from the number of spindles (a.k.a. disk drives) behind the storage controllers. However, the slow disk tended to mask inefficiencies elsewhere in the storage stack, and the adoption of SSDs has exposed them. The spinning disk drive is no longer the lowest common denominator in storage performance; several other technologies and protocols in the I/O path now need to be improved.
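A simple latency budget illustrates the masking effect. End-to-end latency is the sum of the delays along the I/O path (host OS and HBA, SAN switching, the storage target, and the media itself). The per-component figures in this Python sketch are hypothetical round numbers chosen only to show proportions: with a millisecond-class spinning disk the rest of the stack is noise, but with flash it becomes a large share of the total.

```python
# Hypothetical per-component latencies in microseconds (round numbers, not measurements).
STACK_LATENCY_US = {
    "host OS + HBA": 20.0,
    "SAN switching": 5.0,
    "storage target": 50.0,
}


def end_to_end_us(media_us: float) -> float:
    """Total I/O latency: every non-media component plus the media itself."""
    return sum(STACK_LATENCY_US.values()) + media_us


def stack_share(media_us: float) -> float:
    """Fraction of the total latency spent outside the storage media."""
    return sum(STACK_LATENCY_US.values()) / end_to_end_us(media_us)


HDD_MEDIA_US = 5_000.0  # assumed ~5 ms seek + rotation for a random disk read
SSD_MEDIA_US = 100.0    # assumed ~100 us flash read

hdd = end_to_end_us(HDD_MEDIA_US)
ssd = end_to_end_us(SSD_MEDIA_US)
print(f"HDD: {hdd:.0f} us total, {stack_share(HDD_MEDIA_US):.1%} outside the media")
print(f"SSD: {ssd:.0f} us total, {stack_share(SSD_MEDIA_US):.1%} outside the media")
# HDD: 5075 us total, 1.5% outside the media
# SSD: 175 us total, 42.9% outside the media
```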

One of these areas involves the actual method by which the storage media is accessed. Using a protocol designed for spinning disks to access flash leaves us with a key question: can we improve the way that host applications and CPUs can access non-volatile (NV) storage media?

Advent of NVMe

The SCSI protocol has been the bedrock foundation of storage for nearly three decades, and it has served customers admirably. SCSI protocol stacks are ubiquitous across host operating systems, storage arrays, devices, test tools, and more.

It’s not hard to understand why: SCSI is a high-performance protocol. Flash and SSDs, however, have challenged SCSI’s performance limits, because these devices do not have to rotate media or move heads. As a result, the traditional maximum queue depth of 32 or 64 outstanding SCSI READ or WRITE commands is proving insufficient, since SSDs can service a much larger number of READ or WRITE commands in parallel.
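Little’s law (the average number of items in a system equals throughput multiplied by the time each item spends in it) makes the queue-depth argument concrete. This Python sketch assumes round-number latencies of about 5 ms for a random disk I/O and 100 µs for a flash I/O, plus a hypothetical SSD able to sustain 1,000,000 IOPS; none of these figures come from a specific product.

```python
def required_queue_depth(target_iops: float, latency_s: float) -> float:
    """Little's law: average commands in flight = throughput x latency."""
    return target_iops * latency_s


def max_iops(queue_depth: int, latency_s: float) -> float:
    """Upper bound on IOPS when at most `queue_depth` commands can be outstanding."""
    return queue_depth / latency_s


# Assumed round-number latencies, for illustration only.
HDD_LATENCY_S = 5e-3    # ~5 ms per random disk I/O
SSD_LATENCY_S = 100e-6  # ~100 us per flash I/O

# Depth 32 on a disk permits ~6,400 IOPS, far above the ~200 IOPS a spindle
# serving one I/O at a time delivers, so the queue was never the bottleneck.
print(f"{max_iops(32, HDD_LATENCY_S):,.0f}")
# On flash, the same depth caps throughput near 320,000 IOPS...
print(f"{max_iops(32, SSD_LATENCY_S):,.0f}")
# ...while a hypothetical 1,000,000-IOPS SSD needs ~100 commands in flight.
print(f"{required_queue_depth(1_000_000, SSD_LATENCY_S):,.0f}")
```

With a spinning disk, a queue depth of 32 was rarely the limiting factor; with flash, the same cap can leave much of the device’s parallelism unused.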

To address this, a consortium of industry vendors began developing the Non-Volatile Memory Express (NVM Express, or NVMe) protocol. Its key benefit is that a storage subsystem or storage stack can issue and service thousands of READ or WRITE commands in parallel, with greater scalability than traditional SCSI implementations. The result is greatly reduced latency and dramatically higher IOPS and MB/s.
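Much of NVMe’s parallelism comes from letting the host use many independent submission/completion queue pairs (host drivers commonly create one per CPU core) instead of funneling every command through a single queue. The Python toy model below only illustrates that idea: `QueuePair`, `accepted`, and the queue sizes (one queue of depth 32 versus eight queues of depth 1,024) are hypothetical, and real NVMe queues are ring buffers in host memory signaled through doorbell registers rather than Python deques.

```python
from collections import deque


class QueuePair:
    """Toy submission/completion queue pair (illustration only, not the NVMe layout)."""

    def __init__(self, depth: int) -> None:
        self.depth = depth
        self.submission: deque = deque()
        self.completion: deque = deque()

    def submit(self, command: str) -> bool:
        """Queue a command; refuse it if this queue is already full."""
        if len(self.submission) >= self.depth:
            return False
        self.submission.append(command)
        return True

    def service(self) -> None:
        """The 'device' completes every queued command and posts it to the completion queue."""
        while self.submission:
            self.completion.append(self.submission.popleft())


def accepted(queues: list, commands: list) -> int:
    """Spread commands round-robin across queues (e.g. one per CPU core); count how many fit."""
    count = 0
    for i, command in enumerate(commands):
        if queues[i % len(queues)].submit(command):
            count += 1
    return count


commands = [f"READ lba={lba}" for lba in range(5_000)]

# Hypothetical configurations: one shared queue vs. eight independent queue pairs.
single = accepted([QueuePair(depth=32)], commands)
per_core = [QueuePair(depth=1_024) for _ in range(8)]
multi = accepted(per_core, commands)

for queue_pair in per_core:
    queue_pair.service()
completed = sum(len(qp.completion) for qp in per_core)

print(single, multi, completed)  # 32 5000 5000
```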

Shared Storage with NVMe

The next hurdle facing the storage industry is how to deliver this level of performance, as measured by these new metrics, over a storage area network (SAN). Although a number of pundits forecast the demise of the SAN, sharing storage over a network has benefits that many enterprise storage customers enjoy and are reluctant to give up. In brief, the benefits of shared storage over a SAN are:

- More efficient use of storage, which can help avoid “storage islands”

- Full-featured, mature storage services such as snapshots, backup, replication, thin provisioning, de-duplication, encryption, compression, etc.

- Support for advanced cluster applications

- Multiple levels of disk virtualization and a choice of RAID levels

- No single point of failure

- Ease of management with storage consolidation

The challenge, then, is to develop a very low-latency SAN that can deliver these improved I/O metrics.

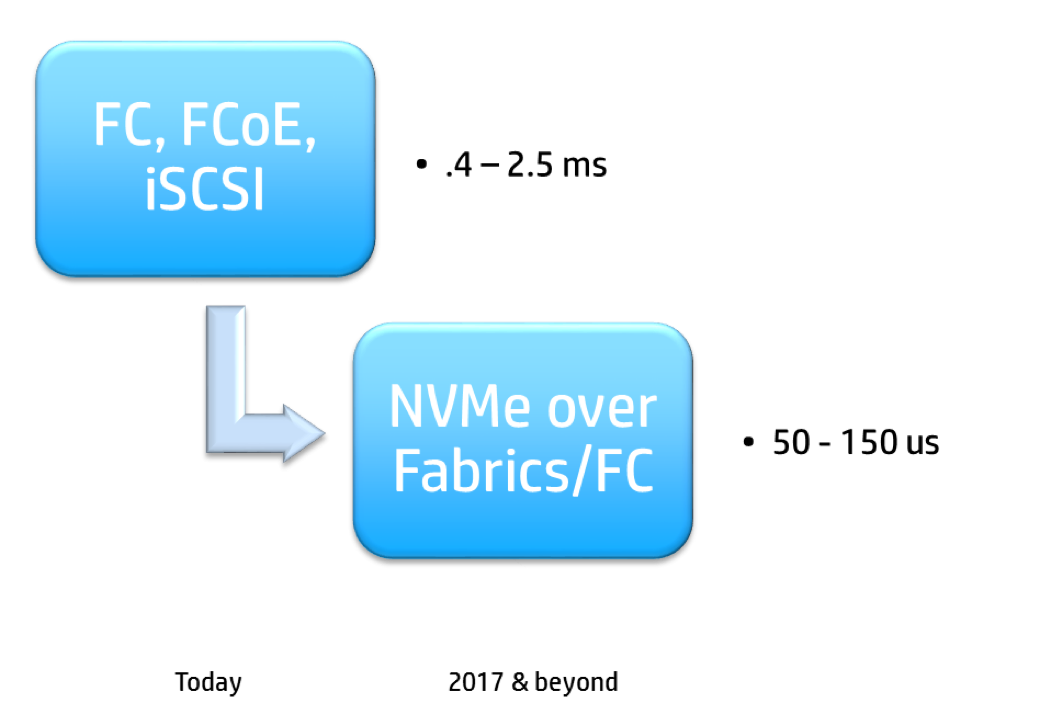

The latency step function challenge is described in the following chart:

Latency Step Function

Two projects now in development in the industry aim to address this challenge:

- NVMe over Fabrics – defined by the NVM Express group

- NVMe over Fibre Channel (FC-NVMe) – a new T11 project to define an NVMe over Fibre Channel protocol mapping

NVMe over Fibre Channel is a new T11 project in which engineers from leading storage companies are actively developing a standard. Fibre Channel has traditionally solved the problem of carrying SCSI over longer distances to enable shared storage. Put simply, Fibre Channel transports SCSI READ and WRITE commands, along with the corresponding data and status, over a network. The T11 group is working to let the same FC transport carry NVMe READ and WRITE commands in a compatible way.
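The layering idea, one transport carrying whichever command set the endpoints speak, can be sketched in a few lines. This is a toy under loud assumptions: the `Frame` type, its fields, and the string protocol tags are hypothetical. Real Fibre Channel frames identify the upper-layer protocol in a TYPE field of the frame header, but the actual frame layout, exchange handling, and the FC-NVMe mapping being drafted by T11 are not modeled here.

```python
from dataclasses import dataclass
from typing import Callable, Dict


@dataclass(frozen=True)
class Frame:
    """Hypothetical, heavily simplified transport frame; not the real FC frame layout."""
    source: str
    destination: str
    upper_protocol: str  # stands in for the header field naming the upper-layer protocol
    payload: bytes


def deliver(frame: Frame, handlers: Dict[str, Callable[[bytes], str]]) -> str:
    """Route a frame by its protocol tag; the transport never parses the payload."""
    return handlers[frame.upper_protocol](frame.payload)


# Hypothetical target that understands both command sets.
handlers = {
    "SCSI": lambda cmd: f"SCSI target handled {cmd.decode()}",
    "NVMe": lambda cmd: f"NVMe target handled {cmd.decode()}",
}

scsi_frame = Frame("host-a", "array-1", "SCSI", b"READ(10)")
nvme_frame = Frame("host-a", "array-1", "NVMe", b"Read")

print(deliver(scsi_frame, handlers))  # SCSI target handled READ(10)
print(deliver(nvme_frame, handlers))  # NVMe target handled Read
```

The transport code path is identical for both frames; only the endpoint handler changes, which is, roughly, why much of the existing FC machinery can be reused.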

Conclusion

NVMe will increase storage performance by orders of magnitude as the protocol and products come to life and mature. Transporting NVMe over a network to enable shared storage is an additional area of focus for the industry. To use an 80/20 analogy, existing Fibre Channel protocol constructs already solve 80% of the problem of carrying NVMe over an FC fabric; the T11 group has drafted a protocol mapping standard and is actively working on the remaining 20%.